Exploring Artificial Intelligence Hardware and Software Infrastructure

Artificial intelligence systems depend on a sophisticated combination of specialized hardware and software working together to process vast amounts of data and execute complex algorithms. Understanding the infrastructure that powers AI applications helps demystify how these systems function and what makes them capable of performing tasks that once required human intelligence. This article examines the essential components that form the foundation of modern AI technology.

The infrastructure supporting artificial intelligence has become one of the most consequential areas of recent technological development. As AI applications spread across industries, the systems that enable machine learning, neural networks, and large-scale data processing have evolved dramatically. Hardware and software must work together to deliver both the raw computational power and the algorithmic sophistication that modern AI demands.

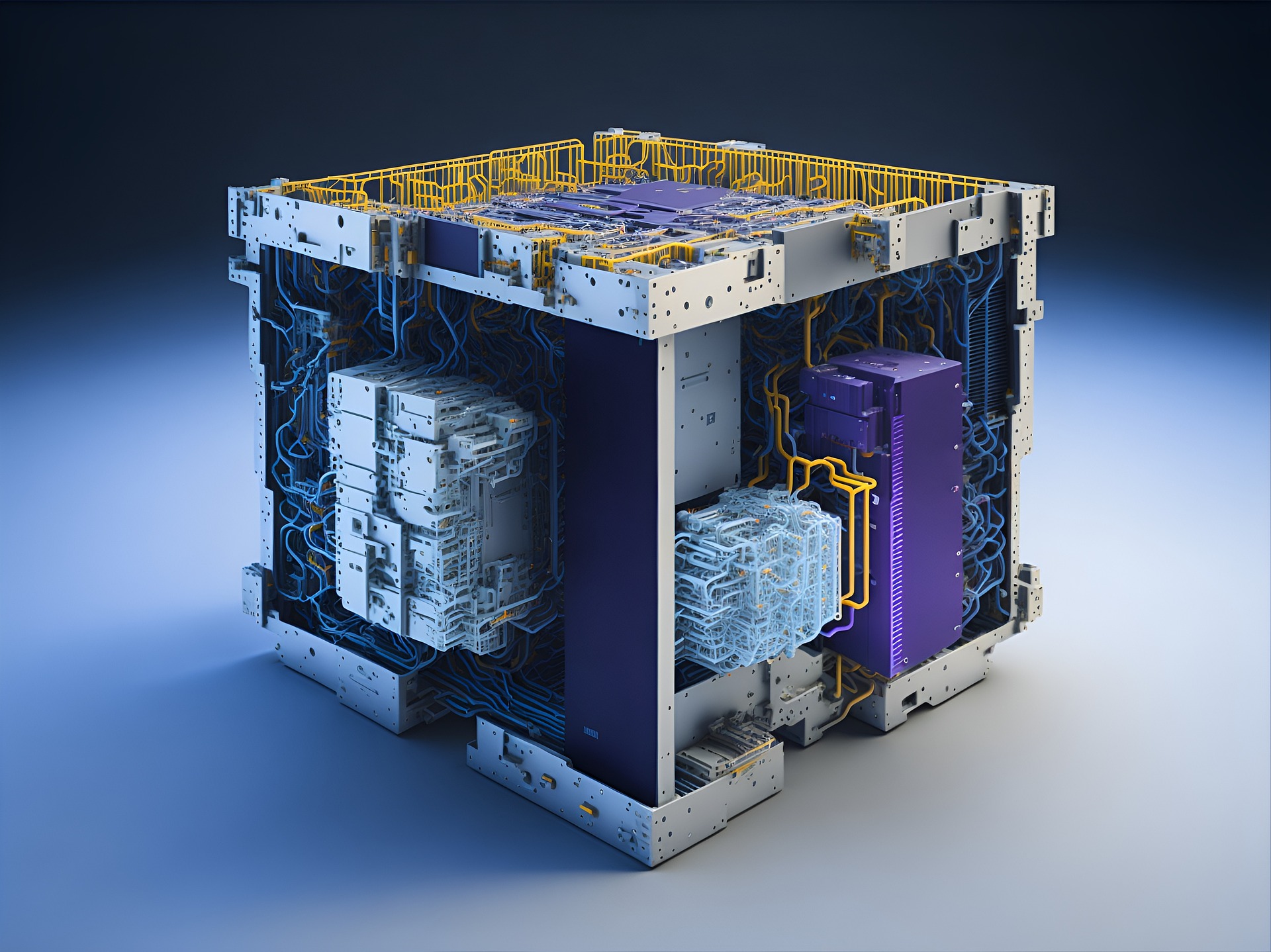

How Hardware Components Enable AI Processing

The physical infrastructure of AI systems centers on specialized processors designed to handle parallel computations efficiently. Graphics Processing Units (GPUs) have become fundamental to AI because they can perform thousands of arithmetic operations simultaneously, which suits the large, repetitive matrix computations involved in training neural networks. Tensor Processing Units (TPUs), designed by Google, are accelerators engineered specifically for machine learning and optimized for the matrix operations that form the backbone of many AI algorithms. Central Processing Units (CPUs) still play an important role in coordinating work and handling tasks that must run sequentially. Memory architecture also has a significant impact on performance: high-bandwidth memory (HBM) gives processors faster access to data and reduces bottlenecks during intensive computation.
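Why GPUs suit this work becomes clearer when a matrix multiplication is broken into tasks. The Python sketch below is a simplified illustration, not how any GPU library is actually implemented: it computes each element of the output matrix as an independent dot product and hands those tasks to a thread pool. The function names are hypothetical. Because of CPython's global interpreter lock, these threads will not make the arithmetic faster; the point is only that no output element depends on any other, which is the property a GPU exploits by spreading elements across thousands of hardware threads.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Compute one output element: the dot product of a row and a column."""
    return sum(a * b for a, b in zip(row, col))


def parallel_matmul(a, b, workers=4):
    """Multiply a (n x k) by b (k x m), treating each output element as a task.

    Hypothetical helper for illustration only. Every (i, j) element is
    independent, which is the decomposition a GPU parallelizes in hardware.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    # Transpose b once so each column is a contiguous list.
    cols = [[b[r][c] for r in range(k)] for c in range(m)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Submit all n * m dot products; none waits on another.
        futures = [[pool.submit(dot, a[i], cols[j]) for j in range(m)]
                   for i in range(n)]
        return [[f.result() for f in row] for row in futures]
```

For example, `parallel_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`.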

Software Frameworks That Power AI Systems

The software layer of AI infrastructure includes the frameworks, libraries, and platforms that developers use to build and deploy machine learning models. Popular frameworks such as TensorFlow, PyTorch, and Keras provide pre-built functions and tools that simplify development. These frameworks handle complex mathematical operations, such as tensor arithmetic and the automatic computation of the gradients needed for training, allowing developers to focus on model architecture and training strategy rather than low-level implementation details. Cloud-based platforms have expanded access to AI infrastructure by offering scalable computing resources without requiring organizations to invest in physical hardware. Software infrastructure also includes data management systems that organize, clean, and prepare the massive datasets required to train effective AI models.
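One of the most important operations frameworks automate is computing gradients. The sketch below is a minimal, scalar-only illustration of reverse-mode automatic differentiation, the general technique behind gradient computation in frameworks like PyTorch and TensorFlow. The `Value` class is hypothetical and is not either framework's API; real frameworks work on multi-dimensional tensors and run these operations on GPUs or TPUs.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = None  # set by the operation that created this node

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        """Apply the chain rule from this node back to every input."""
        order, seen = [], set()

        def visit(node):
            # Depth-first topological sort: inputs are listed before outputs.
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward is not None:
                node._backward()
```

With `x = Value(3.0)`, `y = Value(4.0)`, and `z = x * y + x`, calling `z.backward()` leaves `x.grad == 5.0` (that is, y + 1) and `y.grad == 3.0` (that is, x). A framework performs the same bookkeeping for millions of parameters, which is exactly the low-level work developers no longer write by hand.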

The Relationship Between Hardware and Software in AI

Effective AI systems require hardware and software to be optimized together. Software frameworks must be designed to take full advantage of specialized hardware, exploiting parallel processing architectures and managing memory efficiently. Hardware manufacturers increasingly collaborate with software developers to ensure compatibility and tune performance. Hardware-software co-design has become particularly important in AI development, where an understanding of what machine learning algorithms require directly influences processor architecture decisions. This integrated approach has led to significant improvements in training speed, inference performance, and energy efficiency across AI applications.
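A concrete example of software adapting to hardware is loop tiling (also called blocking), a standard technique in which a computation is reorganized into small blocks that fit in fast memory such as a cache or on-chip SRAM. The sketch below applies it to matrix multiplication; the function name and the default tile size are arbitrary assumptions, and in pure Python it will not run faster. It only shows the loop structure that optimized numerical libraries use so that each block of data is reused many times before it is evicted.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m) in tile-by-tile blocks.

    Hypothetical helper for illustration. Working block by block keeps the
    data in active use small, so on real hardware it can stay in fast memory.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                # Add the contribution of one block of a and one block of b
                # to one block of c; min() handles edges that don't divide evenly.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c
```

The result is identical to an ordinary triple loop; only the order of the arithmetic changes. Choosing the tile size to match a particular processor's memory hierarchy is one small instance of the co-design described above.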

Data Centers and Cloud Infrastructure for AI

Large-scale AI operations typically rely on data center infrastructure that provides the computing power, storage capacity, and networking capabilities necessary for training and deploying models. Modern data centers dedicated to AI workloads feature specialized cooling systems to manage the heat generated by intensive computations, redundant power supplies to ensure continuous operation, and high-speed networking to facilitate data transfer between systems. Cloud service providers have built extensive AI infrastructure that organizations can access on-demand, offering various configurations of GPUs, TPUs, and other specialized hardware. This cloud-based approach has democratized access to powerful AI capabilities, allowing smaller organizations and researchers to work with infrastructure that would be prohibitively expensive to build independently.
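One reason AI data centers need high-speed networking is synchronous data-parallel training. Each machine holds a copy of the model, computes gradients on its own slice of the data, and then all machines exchange and average those gradients (an "all-reduce") before updating. The sketch below simulates this in a single process for a one-parameter linear model. All function names are hypothetical; real clusters use collective communication libraries such as NVIDIA's NCCL and move far larger gradient tensors over the network.

```python
def local_gradient(w, shard):
    """Mean-squared-error gradient for the model y_hat = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: every worker gets the average."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


def data_parallel_step(w, batch, num_workers, lr):
    """One synchronous data-parallel SGD step, simulated in a single process."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    size = len(batch) // num_workers
    # Each simulated worker holds a copy of w and an equal slice of the batch.
    shards = [batch[i * size:(i + 1) * size] for i in range(num_workers)]
    local = [local_gradient(w, shard) for shard in shards]
    # On a real cluster this exchange travels over the high-speed network.
    synced = all_reduce_mean(local)
    # Every worker applies the same update, so the model copies stay identical.
    return w - lr * synced[0]
```

With equal-sized shards, the averaged gradient equals the gradient over the whole batch, so the result matches single-machine training while the computation is split across machines. For instance, with `batch = [(float(x), 3.0 * x) for x in range(1, 9)]`, repeatedly applying `w = data_parallel_step(w, batch, 4, 0.01)` from `w = 0.0` converges toward 3.0.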

Edge Computing and Distributed AI Infrastructure

While centralized data centers remain important for training large AI models, edge computing has emerged as a complementary infrastructure approach for deploying AI applications closer to data sources. Edge devices equipped with specialized AI processors can perform inference tasks locally, reducing latency and bandwidth requirements. This distributed architecture proves particularly valuable for applications requiring real-time responses, such as autonomous vehicles, industrial automation, and mobile applications. The infrastructure supporting edge AI includes compact, energy-efficient processors designed for deployment in resource-constrained environments, along with software frameworks optimized for running models on devices with limited computational resources.
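A common way to fit models onto constrained edge hardware is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts weight memory by a factor of four and lets integer-optimized processors run inference. The sketch below shows symmetric, per-tensor int8 quantization, one of the simplest schemes. The function names are hypothetical, and production toolchains typically use more elaborate variants, such as per-channel scales and calibration on sample data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127].

    Hypothetical helper for illustration. Returns the integer values and the
    single float scale needed to map them back. Expects a non-empty list.
    """
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to +/-127; avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]
```

Each recovered weight differs from the original by at most half of `scale`, so the model trades a small, bounded loss of precision for a much smaller memory footprint and cheaper arithmetic.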

Future Developments in AI Infrastructure

The infrastructure supporting artificial intelligence continues to evolve rapidly as researchers and engineers address current limitations and explore new architectural approaches. Quantum computing represents a potential future direction that could dramatically increase computational capabilities for certain types of AI problems. Neuromorphic computing, which mimics the structure and function of biological neural networks, offers another promising avenue for more efficient AI processing. Advances in memory technologies, interconnect systems, and cooling solutions will continue to push the boundaries of what AI infrastructure can achieve. As AI applications become more sophisticated and widespread, the underlying hardware and software infrastructure will need to scale accordingly, driving ongoing innovation in both domains.
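Many neuromorphic designs are built around spiking neurons, which communicate through discrete events rather than continuous values and do work only when a spike occurs. The leaky integrate-and-fire (LIF) model is one of the simplest such neurons. The discrete-time sketch below is illustrative only: the function name and the threshold, leak, and reset values are arbitrary assumptions, and real neuromorphic chips implement their own neuron models in hardware.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Hypothetical helper for illustration. At each step the membrane potential
    decays by `leak` and adds the input; when it reaches `threshold` the
    neuron emits a spike (1) and the potential returns to `reset`.
    """
    potential = reset
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes
```

For example, `simulate_lif([0.5, 0.5, 0.5, 0.0, 1.2])` returns `[0, 0, 1, 0, 1]`: small inputs accumulate until the neuron fires, and a single large input fires it immediately. Because the output is sparse, hardware built around this model can stay idle most of the time, which is the source of its potential efficiency gains.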

The infrastructure that enables artificial intelligence encompasses a complex ecosystem of specialized hardware, sophisticated software frameworks, and distributed computing resources. Understanding these components provides insight into how AI systems achieve their capabilities and highlights the engineering challenges involved in building and maintaining these systems. As technology advances, the continued development of both hardware and software infrastructure will determine the future possibilities and limitations of artificial intelligence applications across all sectors.